Tutorials

Follow along to learn how to use Depthkit, volumetric video software for fast, authentic 3D content creation.

For more in-depth resources, browse our Documentation.

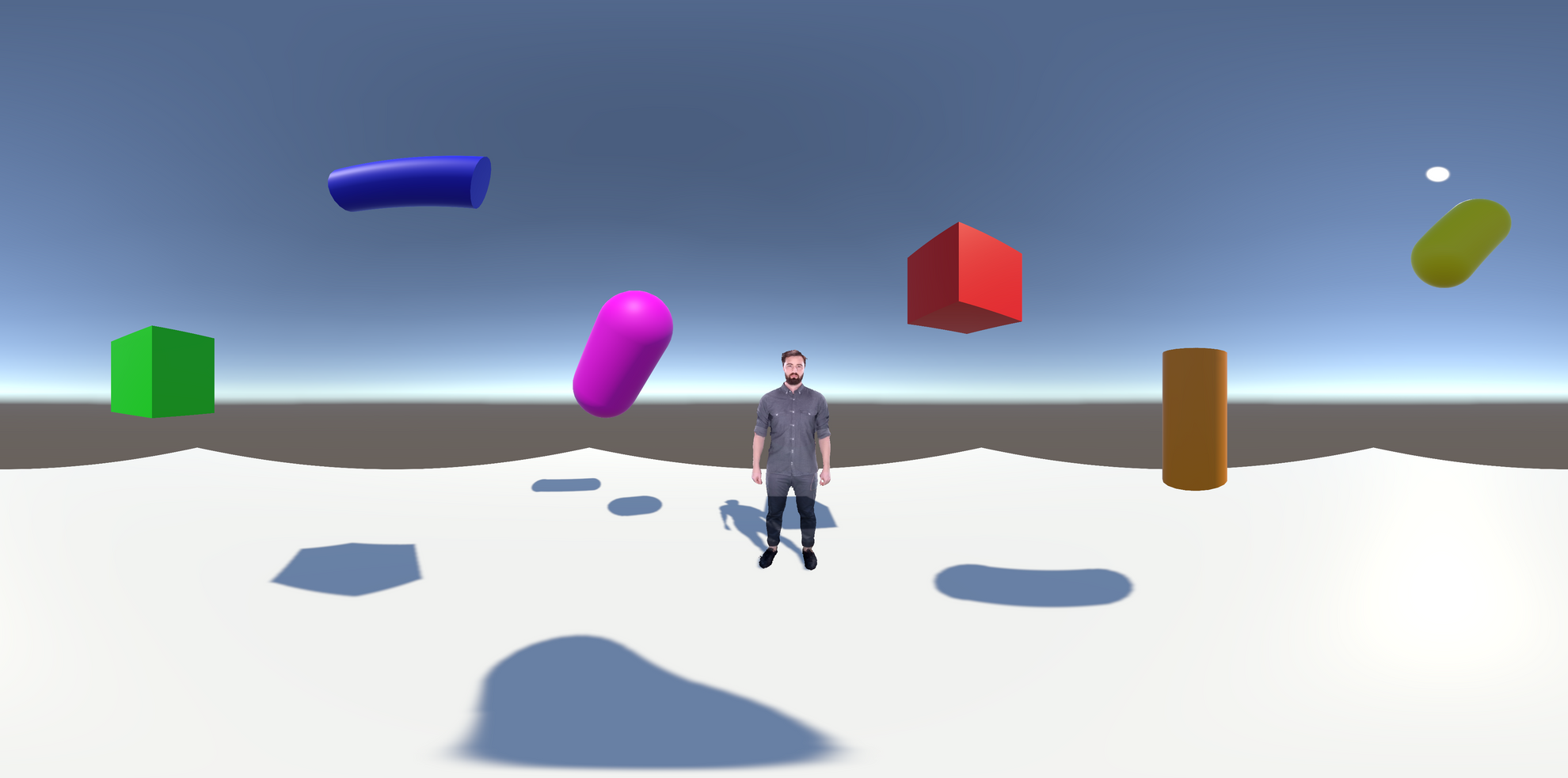

Learn how to bring your Depthkit clips into the Unity game engine.

Depthkit Core Expansion Package for Unity →

Depthkit Studio Expansion Package for Unity →